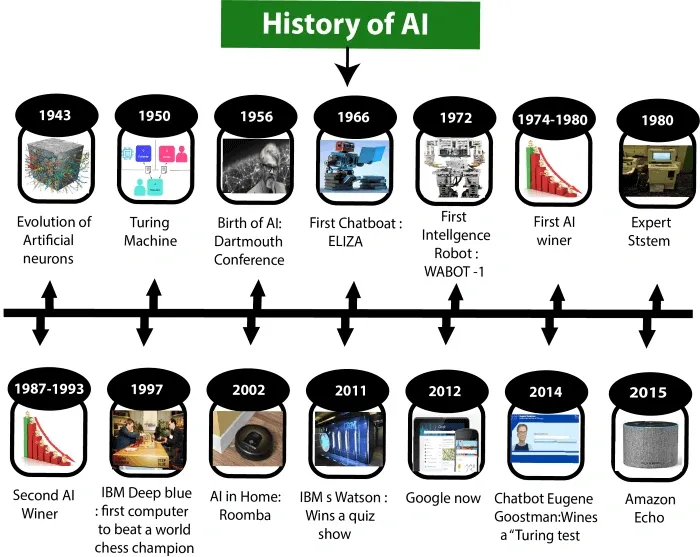

Artificial intelligence started long before the chatbots and image generators we know today. The phrase “artificial intelligence” was officially coined in 1956, at a Dartmouth College workshop now regarded as the birth of AI as a field of study. Though the groundwork was laid in the early 1900s, the 1950s saw a notable shift from theoretical ideas to practical uses.

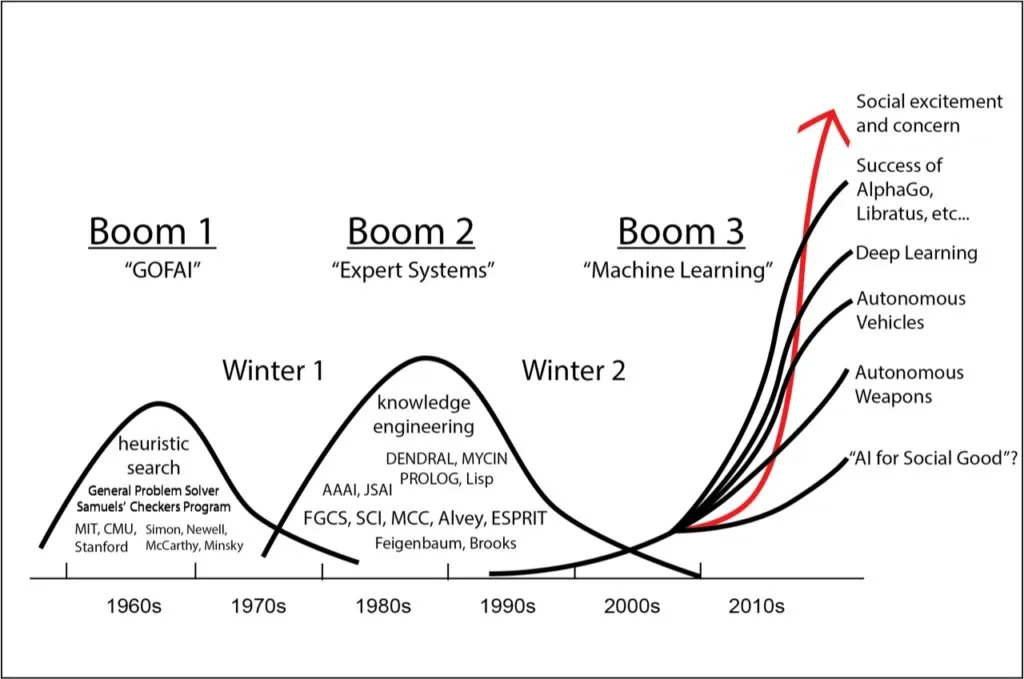

AI development first concentrated on logic-based systems and straightforward games. The first successful AI program ran on the Ferranti Mark I computer in 1951, and Arthur Samuel’s 1952 checkers program showed the potential of machine learning by improving its play over time. The field then saw both remarkable expansion and notable setbacks, particularly during the “AI Winters” of the late 1970s and early 1990s, when funding fell because expectations went unmet. The rise of deep learning in the 2000s revived AI research, and AlexNet marked a turning point in computer vision in 2012. From early theoretical foundations to neural networks with billions of parameters, this timeline of artificial intelligence shows how a once-niche academic interest evolved into technology that acquired 100 million users within months of its release.

Early Visions and Theoretical Foundations (Pre-1950s)

Image Source: Medium

“I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.” — Alan Turing, Mathematician, computer scientist, and pioneer of artificial intelligence

Ancient Cultures’ Mythical Automatons and Mechanical Beings

For thousands of years, people have dreamed of building intelligent machines. Greek mythology describes Talos, a massive bronze automaton created by the god of smiths, Hephaestus. Talos patrolled the island of Crete three times a day and threw boulders at enemy ships. The mysterious life-fluid known as ichor, which ran through a tube from his head to his ankle, powered this mechanical guardian. Another well-known example is Pygmalion, who created a statue so lifelike that the goddess Venus granted his wish to make it come to life.

Humanity’s ongoing fascination with creating artificial life is reflected in these ancient tales. Furthermore, by the first millennium BCE, Chinese, Indian, and Greek philosophers had developed formal systems of thought that served as the foundation for the idea of mechanical reasoning. The formal methods of logical deduction that would eventually serve as the basis for computational thinking were developed by thinkers such as Aristotle, Euclid, and al-Khwārizmī (whose name inspired the word “algorithm”).

The Turing Test and Alan Turing’s Universal Machine

Alan Turing’s seminal work established the contemporary framework for artificial intelligence. Turing first proposed the idea of the Universal Machine in his 1936 paper “On Computable Numbers, with an Application to the Entscheidungsproblem.” This theoretical device, subsequently dubbed the Turing Machine, provided a formal model of computing: a machine that could carry out any mathematical operation that can be expressed as an algorithm.

In 1950, Turing went on to publish “Computing Machinery and Intelligence,” another seminal paper, which examined the potential for building thinking machines. Aware of the difficulties in defining “thinking,” Turing put forth what is now known as the Turing Test: a machine would be deemed to be “thinking” if it could carry on a conversation indistinguishable from a human one. Turing boldly predicted that by the end of the century, computers would be so proficient at the “imitation game” that an average interrogator would have no more than a 70 per cent chance of correctly identifying the machine after five minutes of questioning.

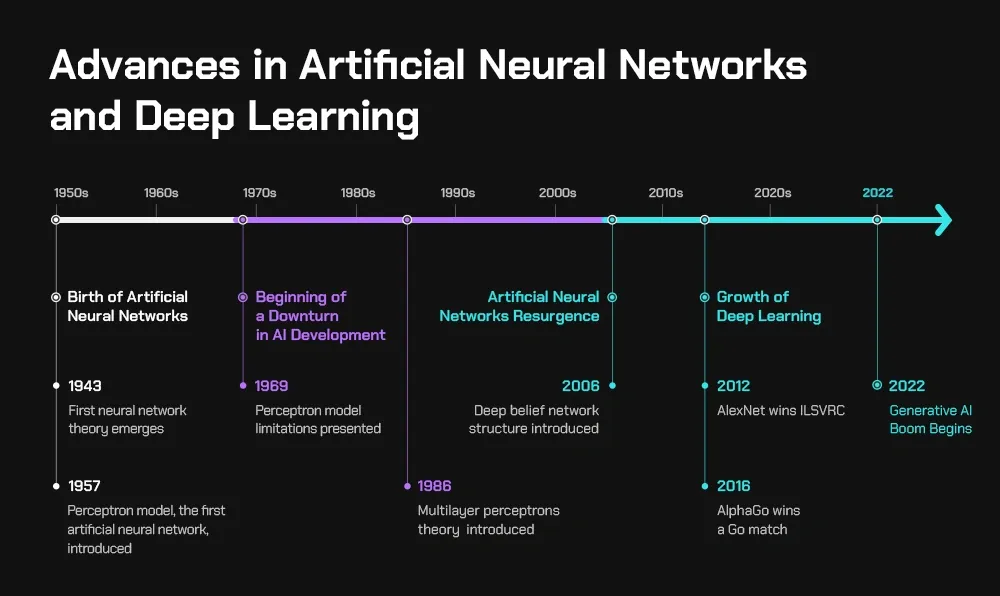

The Neural Model of McCulloch and Pitts (1943)

When logician Walter Pitts and neuroscientist Warren McCulloch published “A Logical Calculus of the Ideas Immanent in Nervous Activity” in 1943, the biological foundations of artificial intelligence began to take shape. They put forth a mathematical model of neural networks in this groundbreaking paper, which would serve as the cornerstone for the advancement of AI.

By treating brain cells as logical units with binary outputs, McCulloch and Pitts developed a simplified model of a neuron. Their research showed how simple logical operations could be carried out by networks of these idealised artificial neurons. This model established a vital connection between biology and computation by demonstrating that neural networks could theoretically compute any function that a Turing machine could calculate.
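The idea of a neuron as a binary threshold unit can be illustrated with a minimal Python sketch. The function names, weights, and thresholds below are illustrative assumptions, and the negative weight used for NOT is a simplification of the inhibitory inputs described in the 1943 paper rather than a faithful reproduction of its notation.

```python
def mp_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def AND(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mp_neuron([a, b], [1, 1], threshold=2)


def OR(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], [1, 1], threshold=1)


def NOT(a):
    # One inhibitory (negative-weight) input: the unit fires only when the input is silent.
    return mp_neuron([a], [-1], threshold=0)


def XOR(a, b):
    # XOR cannot be computed by one such unit, but a small network can: (a OR b) AND NOT (a AND b).
    return AND(OR(a, b), NOT(AND(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```

Wiring several units together, as in `XOR`, is what lets a network of these simple neurons carry out logical operations that no single unit can.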

Despite being simplistic by today’s standards, the McCulloch-Pitts model introduced important ideas that are still essential to the design of neural networks. Their research demonstrated that propositional logic could be used to characterise the behaviour of any neural network, with the necessary adjustments for networks with loops or circles. This theoretical framework made later developments in machine learning, such as Frank Rosenblatt’s perceptron in 1958, possible.

The Birth of Symbolic AI and Logic-Based Systems (1950s–1970s)

Image Source: Jesus Rodriguez – Medium

By the middle of the 1950s, the theoretical underpinnings of artificial intelligence had evolved into real-world uses, ushering in the era of symbolic AI based on rule-based systems and logic.

Dartmouth Conference and the Coining of ‘Artificial Intelligence’

A significant event that would shape the field for decades to come took place at Dartmouth College in the summer of 1956. Leading thinkers gathered to discuss the potential of “thinking machines” at the Dartmouth Summer Research Project on Artificial Intelligence, which was organised by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester. McCarthy introduced the term “artificial intelligence” to the scientific community for the first time by specifically selecting it for this meeting. The workshop, which essentially served as a prolonged brainstorming session, lasted roughly six to eight weeks.

The proposal for this historic meeting was bold: “We propose that a 2-month, 10-man study of artificial intelligence be carried out…” The study was to proceed on the conjecture that every aspect of learning or any other feature of intelligence can, in principle, be described so precisely that a machine can be made to simulate it. Many now refer to this meeting as the “Constitutional Convention of AI.”

Logic Theorist and General Problem Solver by Newell and Simon

The Logic Theorist, which is frequently regarded as the first artificial intelligence program, was already under development by Allen Newell and Herbert Simon before the Dartmouth conference. This groundbreaking system, which was finished in 1956, was able to prove 38 of the first 52 theorems in Whitehead and Russell’s Principia Mathematica. For some of them, it even found more elegant proofs. Bertrand Russell is said to have “responded with delight” when he was shown one of these computer-generated proofs.

In 1957, Newell and Simon developed the General Problem Solver (GPS) to build on this achievement. Unlike the Logic Theorist, GPS used means-ends analysis, repeatedly choosing actions that reduce the difference between the current state and the goal, which made it a major development in AI design. Notably, it was the first program to separate its problem-solving methodology (the solver engine) from its problem knowledge (represented as input data). Although GPS could solve well-defined problems like the Towers of Hanoi, computational constraints meant it struggled with real-world applications.

LISP Programming Language for AI (1958)

In 1958, John McCarthy created the LISP programming language, another important advancement in AI. Developed especially for artificial intelligence research, LISP is the second-oldest high-level programming language still in use today, after FORTRAN. Because McCarthy built it on the mathematical theory of recursive functions, LISP is especially well suited to symbolic computation.

Tree data structures, dynamic typing, automatic storage management, and higher-order functions are just a few of the computer science ideas that LISP pioneered. Its fully parenthesised prefix notation represents code and data in the same list form, so programs could manipulate other programs as data, which was essential for the development of AI. This made LISP the most widely used programming language in AI research for many years.
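To give a feel for what “programs as data” means, here is a rough Python sketch rather than actual LISP. It stores prefix expressions as nested lists, evaluates them, and then rewrites one expression with ordinary list operations. The helper names `evaluate` and `swap_operator` and the small operator table are assumptions made for this illustration.

```python
import operator

# Hypothetical operator table for this sketch; real LISP has far more built-ins.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(expr):
    """Evaluate a prefix expression stored as nested lists, e.g. ["+", 1, ["*", 2, 3]]."""
    if not isinstance(expr, list):
        return expr  # a bare number evaluates to itself
    op, *args = expr
    values = [evaluate(arg) for arg in args]
    result = values[0]
    for value in values[1:]:
        result = OPS[op](result, value)
    return result


def swap_operator(expr, old, new):
    """Treat a program as data: return a copy with every `old` operator replaced by `new`."""
    if not isinstance(expr, list):
        return expr
    head = new if expr[0] == old else expr[0]
    return [head] + [swap_operator(arg, old, new) for arg in expr[1:]]


if __name__ == "__main__":
    program = ["+", 1, ["*", 2, 3]]
    print(evaluate(program))
    print(swap_operator(program, "*", "+"))
```

Because the program is just a list, the same code that builds or edits lists can build or edit programs, which is the property the parenthesised notation makes so natural in LISP.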

ELIZA and Early Natural Language Processing

ELIZA, an early natural language processing program that would become a landmark in human-computer interaction, was first developed in 1964 by Joseph Weizenbaum at MIT. By using pattern matching and substitution to mimic conversation, ELIZA gave the impression that it understood what was said without really doing so. Its most well-known script, DOCTOR, imitated a Rogerian psychotherapist by reflecting users’ statements back as questions.
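The pattern-matching-and-substitution approach can be sketched in a few lines of Python. The rules, pronoun table, and fallback reply below are invented for illustration and are not taken from Weizenbaum’s DOCTOR script; they only show how a statement can be turned into a question without any understanding of its content.

```python
import re

# Pronoun swaps so the user's words can be reflected back ("my" becomes "your").
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "mine": "yours"}

# (pattern, response template) pairs, tried in order. Illustrative only.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Why do you say your {0}?"),
]


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement):
    """Return the first matching rule's response, or a generic prompt."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


if __name__ == "__main__":
    print(respond("I feel ignored by my boss."))
    print(respond("Hello there"))
```

Nothing in this sketch models meaning: the reply is assembled entirely from the user’s own words, which is why such a simple mechanism can still feel attentive.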

Surprisingly, even though Weizenbaum maintained that ELIZA lacked true understanding, many users developed an emotional bond with it, sometimes forgetting they were speaking to a computer. This phenomenon, later dubbed the “ELIZA effect,” demonstrated humans’ propensity to attribute comprehension and empathy to machines exhibiting even basic conversational skills. ELIZA was one of the first chatterbots and one of the first programs capable of attempting the Turing Test.

Expert Systems and the First AI Boom (1980s)

Image Source: Schneppat AI

The 1980s marked a pivotal era for artificial intelligence as knowledge-based expert systems moved from academic research into profitable commercial applications, triggering the first major AI boom.

Rise of Knowledge-Based Systems like MYCIN and DENDRAL

The field of artificial intelligence moved toward specialised knowledge-based systems after early AI’s emphasis on general problem-solving. The first successful expert system was DENDRAL, created in 1965 at Stanford University by Bruce Buchanan, Joshua Lederberg, and Edward Feigenbaum (often referred to as the “father of expert systems”). This innovative program used data from mass spectrometry to identify unknown organic molecules. Due to DENDRAL’s success, MYCIN was developed in the early 1970s and was able to diagnose bacterial infections and prescribe antibiotics with an accuracy level that was on par with that of medical specialists. By emphasising domain-specific expertise over generalised reasoning, these systems marked a significant paradigm shift in AI research. “We were trying to invent AI, and in the process discovered an expert system,” as Lederberg put it.

Commercial Success of XCON and R1

The transition from research to commercial viability occurred most dramatically with XCON (eXpert CONfigurer), also known as R1. Developed by John McDermott at Carnegie Mellon University in 1978, XCON helped Digital Equipment Corporation (DEC) configure computer systems based on customer requirements. The system went into production in 1980 at DEC’s plant in Salem, New Hampshire. By 1986, XCON had processed 80,000 orders with 95-98% accuracy and saved DEC approximately USD 25 million annually by reducing configuration errors. This commercial triumph sparked widespread corporate interest, with approximately two-thirds of Fortune 500 companies implementing expert systems in their daily operations by the mid-1980s.

Fifth Generation Computer Systems Project in Japan

Consequently, international interest in AI surged. In 1982, Japan launched its ambitious Fifth Generation Computer Systems (FGCS) project through the Ministry of International Trade and Industry (MITI). This 10-year initiative aimed to develop computers based on massively parallel processing and logic programming to create “an epoch-making computer” with supercomputer-like performance. Despite investing approximately USD 400 million, the project ultimately failed to meet its commercial goals. Nonetheless, the FGCS project significantly contributed to concurrent logic programming research and prompted increased AI funding globally, including the formation of the Microelectronics and Computer Technology Corporation in the United States.

AI Winters and the Shift Toward Machine Learning (1990s–2000s)

Image Source: Perplexity

“Machines take me by surprise with great frequency.” — Alan Turing, Mathematician, computer scientist, and pioneer of artificial intelligence

Collapse of LISP Machines and Funding Cuts

The Second AI Winter began dramatically in 1987 with the sudden collapse of the specialised AI hardware market. Desktop computers from Apple and IBM had become more powerful and significantly cheaper than the specialised Lisp machines designed specifically for AI programming. Companies like Symbolics, which had invested heavily in specialised AI hardware, struggled financially, with Symbolics eventually filing for bankruptcy in 1991. Simultaneously, major government initiatives scaled back their AI components: Reagan’s Strategic Defense Initiative reduced its AI funding, while Japan’s Fifth Generation Computer Systems project and the U.S. Strategic Computing Initiative both failed to meet their ambitious AI goals.

Backpropagation and the Revival of Neural Networks

Amid this winter, the seeds of revival were taking root. Neural networks, which had faced criticism and neglect since the publication of Minsky and Papert’s Perceptrons in 1969, experienced a renaissance. Arthur Bryson and Yu-Chi Ho had described a backpropagation learning algorithm as early as 1969, but the technique gained renewed attention only in the late 1980s and early 1990s. Backpropagation enabled multilayer artificial neural networks to learn from their errors by passing the output error backwards through the layers to adjust each weight, essentially allowing machines to improve performance over time through experience rather than explicit programming.
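To make the idea of learning from errors concrete, here is a minimal pure-Python sketch of backpropagation on a tiny network with two inputs, two sigmoid hidden units, and one sigmoid output. The network size, starting weights, learning rate, and squared-error loss are all assumptions chosen for illustration; it is not a reconstruction of any historical implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Return the hidden activations and the output of a 2-2-1 sigmoid network."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def loss(params, x, target):
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2


def backprop(params, x, target):
    """Gradients of the squared error, propagated from the output back to the hidden layer."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden, output = forward(params, x)
    # Output unit: error times the slope of the sigmoid at the output.
    delta_out = (output - target) * output * (1.0 - output)
    grad_w_out = [delta_out * h for h in hidden]
    # Hidden units: the output error is sent back through each outgoing weight.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    grad_w_hidden = [[d * xi for xi in x] for d in delta_hidden]
    return grad_w_hidden, delta_hidden, grad_w_out, delta_out


def sgd_step(params, grads, lr=0.5):
    """Move every weight a small step against its gradient."""
    w_hidden, b_hidden, w_out, b_out = params
    gw_h, gb_h, gw_o, gb_o = grads
    return (
        [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(w_hidden, gw_h)],
        [b - lr * g for b, g in zip(b_hidden, gb_h)],
        [w - lr * g for w, g in zip(w_out, gw_o)],
        b_out - lr * gb_o,
    )


# Arbitrary starting weights and a single training example (both assumptions).
params = ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2], [0.7, -0.6], 0.05)
x, target = [1.0, 0.0], 1.0
before = loss(params, x, target)
for _ in range(200):
    params = sgd_step(params, backprop(params, x, target))
after = loss(params, x, target)

if __name__ == "__main__":
    print(before, after)
```

Each pass computes how much every weight contributed to the error and nudges it in the opposite direction, so the loss on the training example shrinks as the loop repeats.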

TD-Gammon and Reinforcement Learning Breakthroughs

A watershed moment came in 1992 when Gerald Tesauro at IBM developed TD-Gammon, a neural network that taught itself to play backgammon through self-play. Starting from completely random play, TD-Gammon played against itself approximately 1.5 million times, eventually achieving a performance that rivalled top human players. What made TD-Gammon remarkable was that it discovered strategies humans had previously overlooked or erroneously ruled out. For example, it found that the more conservative opening move of 24-23 was superior to the conventional “slotting” strategy with certain rolls. Tournament players who adopted TD-Gammon’s unconventional approaches found success, validating the system’s discoveries.

TD-Gammon demonstrated that reinforcement learning, a method in which systems learn optimal behaviours through trial and error, could solve complex real-world problems. This breakthrough helped shift focus from symbolic approaches toward statistical methods and machine learning, heralding a new era where algorithms could discover knowledge rather than having it programmed explicitly.
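The “TD” in TD-Gammon stands for temporal-difference learning. As a much-simplified sketch, the Python below applies a tabular one-step TD update to a toy five-state chain in which the agent always moves right and is rewarded on reaching the end. The chain, the reward, and the parameters `alpha` and `gamma` are assumptions for illustration; TD-Gammon itself used a neural network over backgammon positions, not a lookup table.

```python
def td0_chain(num_states=5, episodes=200, alpha=0.1, gamma=1.0):
    """Estimate state values on a toy chain using one-step temporal-difference updates."""
    values = [0.0] * num_states  # the last state is terminal and keeps value 0
    for _ in range(episodes):
        state = 0
        while state < num_states - 1:
            next_state = state + 1
            reward = 1.0 if next_state == num_states - 1 else 0.0
            # Nudge the estimate toward the reward plus the discounted next estimate.
            target = reward + gamma * values[next_state]
            values[state] += alpha * (target - values[state])
            state = next_state
    return values


if __name__ == "__main__":
    print(td0_chain())
```

No one tells the learner what each state is worth: the estimates start at zero and the final reward gradually propagates backwards through repeated play, which is the same principle TD-Gammon applied at far larger scale.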

Modern AI: Deep Learning, LLMs, and Generative Models (2010s–Present)

Image Source: SK hynix Newsroom

Deep learning transformed artificial intelligence in the 2010s, shifting from theoretical principles to practical applications that surpassed human performance across multiple domains.

ImageNet and the Rise of Convolutional Neural Networks

The modern AI revolution began in 2012 when AlexNet, a deep convolutional neural network with 60 million parameters, won the ImageNet Large Scale Visual Recognition Challenge. Trained on 1.2 million high-resolution images across 1,000 categories, AlexNet achieved a top-5 error rate of 15.3%, more than 10 percentage points lower than its nearest competitor. This success relied on innovative techniques, including dropout regularisation and ReLU activation functions, which became standard in neural network design. AlexNet’s dominance demonstrated that neural networks could effectively learn from massive datasets when provided sufficient computational resources.
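The two techniques named above are simple to state in code. The sketch below is a plain-Python illustration, not AlexNet’s implementation: `relu` passes positive values and zeroes the rest, and `dropout` randomly silences units during training. It uses the “inverted” dropout variant common in current libraries, which rescales surviving units during training, whereas the original AlexNet paper instead scaled outputs at test time. The function names and the `rng` parameter are assumptions.

```python
import random


def relu(values):
    """Rectified linear unit: keep positive activations, replace negatives with zero."""
    return [max(0.0, v) for v in values]


def dropout(values, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` and rescale the survivors."""
    if not training or rate == 0.0:
        return list(values)  # at inference time every unit is kept unchanged
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


if __name__ == "__main__":
    rng = random.Random(0)
    activations = relu([-1.5, 0.2, 3.0, -0.1])
    print(activations)
    print(dropout(activations, rate=0.5, rng=rng))
```

Randomly removing units forces the network not to rely on any single feature, which is why dropout acts as a regulariser.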

AlphaGo and Strategic Game Mastery

In March 2016, DeepMind’s AlphaGo defeated world champion Lee Sedol 4-1 in a widely watched match that showcased AI’s strategic capabilities. AlphaGo combined deep neural networks with Monte Carlo tree search: one network selected potential moves while another evaluated board positions. The achievement came a decade earlier than experts had predicted and earned AlphaGo an honorary 9-dan professional ranking from the Korea Baduk Association. A later version, AlphaGo Zero, learned without human training data and beat the earlier model 100-0.

GPT-3 and the Emergence of Large Language Models

OpenAI released GPT-3 in 2020, featuring 175 billion parameters, roughly ten times more than previous models. Trained on 570 gigabytes of text, GPT-3 demonstrated remarkable “zero-shot” and “few-shot” learning abilities, performing new tasks from a prompt or a handful of examples without task-specific training. Its transformer architecture used attention mechanisms to selectively focus on relevant text segments. In controlled experiments, GPT-3 generated text that readers could not distinguish from human writing 48% of the time.
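How an attention mechanism “focuses” can be shown with a small pure-Python sketch of scaled dot-product attention, the generic formulation used in transformer models. This is an illustration under simplifying assumptions (a single query, tiny hand-written vectors, no learned projections) and is not GPT-3’s code.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector over a list of key/value vectors."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is a weighted average of the value vectors.
    output = [sum(w * value[i] for w, value in zip(weights, values)) for i in range(dim)]
    return weights, output


if __name__ == "__main__":
    weights, output = attention(
        query=[1.0, 0.0],
        keys=[[10.0, 0.0], [0.0, 10.0]],
        values=[[1.0, 2.0], [3.0, 4.0]],
    )
    print(weights, output)
```

The query matches the first key far better than the second, so almost all of the weight, and therefore almost all of the output, comes from the first value vector.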

DALL·E and Sora: Text-to-Image and Text-to-Video AI

OpenAI followed GPT-3 with DALL-E in 2021, generating realistic images from text descriptions. Its successor, DALL-E 2, chains several neural networks: CLIP links the text prompt to an image representation, a GLIDE-based diffusion model turns that representation into a low-resolution image, and further networks enhance the resolution. In 2024, OpenAI introduced Sora, producing photorealistic videos up to 60 seconds long from text prompts. These generative models represent a significant advancement in AI’s creative capabilities, though challenges remain in preventing misinformation and ensuring ethical use.

Conclusion

We have seen the incredible evolution of artificial intelligence over the years, from theoretical ideas in the 1950s to the complex neural networks with billions of parameters of today. But it hasn’t been a straight line. The field suffered severe setbacks during the AI winters when funding decreased and expectations were not fulfilled, despite times of ebullient growth and progress. However, these difficulties ultimately made AI research stronger by compelling practitioners to reevaluate basic theories and shift toward more efficient techniques.

Perhaps the most important paradigm shift in the development of AI has been the transition from rule-based symbolic systems to statistical machine learning. By laying the theoretical underpinnings and developing the first useful AI implementations, early pioneers like Alan Turing, John McCarthy, and Allen Newell created vital groundwork. Expert systems later proved to be commercially viable in the 1980s, although their drawbacks were eventually discovered. Deep learning’s significant impact in the 2010s was subsequently made possible by the revival of neural networks through backpropagation algorithms and reinforcement learning techniques.

The image recognition of AlexNet, the strategic gameplay of AlphaGo, and the natural language generation of GPT models are just a few examples of how modern AI applications exhibit capabilities that were nearly unthinkable for earlier researchers. Rather than simply following explicit instructions, these systems discover knowledge by analysing vast datasets to identify patterns. The distinction between human and machine creativity is further blurred by the most recent generative models, such as DALL-E and Sora.

A basic reality emerges from examining this seven-decade evolution: artificial intelligence has consistently reinvented itself, eschewing failed strategies and advancing promising ones. AI has surely evolved from an academic curiosity into a technology that influences our daily lives, even though issues with ethics, disinformation, and responsible deployment still exist. Therefore, the hidden history of AI serves as a reminder that the state-of-the-art systems of today are the result of innumerable researchers whose unwavering work, even in the face of scepticism, enabled these breakthroughs.